Artificial intelligence (AI) tools have changed how many industries operate, improving efficiency and enabling automation. However, one of the most persistent challenges in AI research and development is the black box problem: AI systems make decisions without providing transparent explanations for them. Understanding how this problem affects the development of AI technologies, and which solutions are available, is crucial for building trust in AI and its applications.

The Black Box Problem in AI: An Overview

At its core, the black box in AI refers to the opacity of decision-making processes within many machine learning models, particularly deep learning models. These models can produce accurate results, but their internal workings are often too complex to interpret. The black box problem in AI becomes particularly problematic in high-stakes fields like healthcare, finance, and law enforcement, where understanding why a decision was made is critical.

- AI tools such as deep neural networks excel at pattern recognition but are often too convoluted for humans to fully understand.

- The complexity arises because these models involve multiple layers of computations and transformations, making it difficult to trace how input data turns into output decisions.

The Impact of the Black Box Problem in AI

The lack of transparency in decision-making creates challenges in a number of ways:

- Accountability: In cases where AI systems make erroneous or harmful decisions, it’s difficult to pinpoint the exact cause of the problem, which complicates accountability and trust.

- Bias: Without insight into how AI systems operate, it becomes harder to identify and correct any biases in the model’s decision-making process.

- Regulatory Concerns: As AI continues to evolve, regulators face challenges in ensuring these technologies comply with ethical standards and laws. Lack of interpretability hinders regulation.

Understanding the Need for Explainable AI

To mitigate the black box problem in AI, researchers are turning to explainable AI (XAI). Explainable AI aims to provide a clear, understandable explanation of how AI systems arrive at their decisions. This is crucial in fostering trust, ensuring fairness, and maintaining accountability.

How Explainable AI Helps

- Transparency: With explainable AI, users can access insights into how AI systems process data and make predictions.

- Trust and Adoption: As stakeholders can understand AI decision-making, they are more likely to trust AI tools for critical applications.

- Ethical Decisions: Explainable AI promotes ethical decision-making by revealing biases or discriminatory patterns in AI systems.

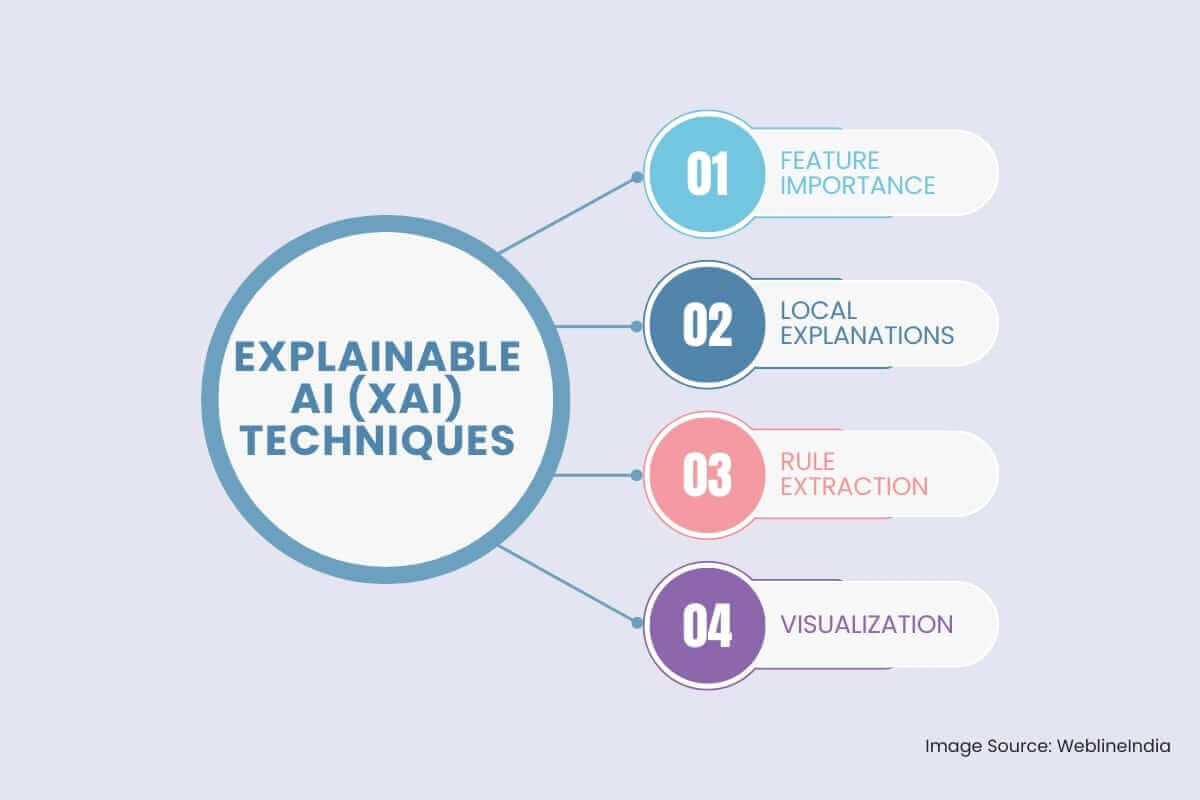

Explainable AI (XAI) Techniques

XAI research focuses on developing techniques that make the decision-making processes of AI models more transparent and understandable. Some key XAI techniques include:

- Feature Importance: This technique aims to identify the input features that have the greatest influence on the model’s output.

  - Permutation Importance: This method assesses the importance of a feature by randomly shuffling its values and measuring how much the model’s performance degrades.

  - SHAP (SHapley Additive exPlanations): This game theory-based approach uses Shapley values to attribute each prediction to individual features, giving a more nuanced view of feature importance.

- Local Explanations: These methods provide explanations for individual predictions made by the model.

  - LIME (Local Interpretable Model-agnostic Explanations): This technique approximates the model’s behavior locally around a specific data point using a simpler, more interpretable model, such as a linear model or a decision tree.

  - Anchors: This method identifies a set of conditions (anchors) that, when they hold, keep the model’s prediction the same with high probability.

- Rule Extraction: This approach involves extracting human-readable rules from the trained model.

  - Decision Tree Induction: This technique involves building a decision tree, which can be easily interpreted by humans, to approximate the model’s predictions.

  - Rule-based systems: These systems explicitly represent the model’s knowledge in the form of if-then rules.

- Visualization: Visualizing the internal workings of an AI model can provide valuable insights into its decision-making process.

  - Activation Maps: These visualizations highlight the areas of an image that the model focuses on when making a prediction.

  - Attention Mechanisms: Attention weights can be visualized to show which parts of a text or sequence the model attends to when making a prediction.
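To make the feature importance techniques above more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not how the SHAP library or any production tool is implemented: `black_box` is a hypothetical stand-in for an opaque model, the data is synthetic, and the Shapley computation uses a single reference input (`background`) and enumerates every feature subset, which is only practical for a handful of features.

```python
import itertools
import math
import random


def black_box(row):
    """Hypothetical opaque model: callers only ever see its output."""
    x0, x1, x2 = row
    return 3.0 * x0 + x1 * x1 + 0.0 * x2  # x2 is deliberately ignored


def mse(rows, targets):
    """Mean squared error of the black box on a dataset."""
    return sum((black_box(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, feature, rng):
    """Increase in error after shuffling the values of one feature column."""
    baseline = mse(rows, targets)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled = [r[:feature] + (v,) + r[feature + 1:] for r, v in zip(rows, column)]
    return mse(shuffled, targets) - baseline


def shapley_values(x, background):
    """Exact Shapley values for input x relative to one reference input.

    Features outside a coalition take the reference input's value.
    """
    n = len(x)

    def value(coalition):
        mixed = tuple(x[i] if i in coalition else background[i] for i in range(n))
        return black_box(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = (math.factorial(size) * math.factorial(n - size - 1)
                      / math.factorial(n))
            for subset in itertools.combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {i}) - value(members))
        phi.append(total)
    return phi


rng = random.Random(0)
rows = [tuple(rng.uniform(-1, 1) for _ in range(3)) for _ in range(200)]
targets = [black_box(r) for r in rows]  # synthetic labels the model fits exactly

importances = [permutation_importance(rows, targets, f, rng) for f in range(3)]
print("permutation importances:", importances)

x = (1.0, 2.0, 5.0)
background = (0.0, 0.0, 0.0)
phi = shapley_values(x, background)
print("Shapley values:", phi)
```

In this toy setup, shuffling the ignored third feature leaves the error unchanged, and the Shapley values sum to the difference between the model's output for `x` and its output for the reference input. Real tools typically average over a background dataset and use approximations rather than full enumeration.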

Overcoming Challenges in Developing Explainable AI

While explainable AI offers a promising solution, implementing it effectively comes with its own set of challenges:

- Trade-Off Between Accuracy and Explainability: Some of the most powerful AI tools, like deep neural networks, may offer high accuracy but at the cost of being harder to explain. Balancing these two factors remains a significant challenge.

- Complexity of Models: Many AI technologies rely on advanced algorithms that are inherently difficult to explain. Research continues to explore how to make these complex models interpretable without sacrificing their performance.

- Scalability: As AI tools are deployed at larger scales, maintaining effective explanations for every decision made by the system can become overwhelming.

The Role of AI Technologies in Solving the Black Box Problem

AI technologies are constantly evolving to create more transparent and interpretable systems. From developing better algorithms to enhancing data visualization techniques, these technologies are paving the way for solutions to the black box problem in AI.

- Hybrid Models: Combining explainable and black-box models could provide a balance between performance and transparency. For example, a complex model might supply the underlying predictions while a simpler, interpretable model is used to make or explain the final decision, which may allow for both accuracy and interpretability.

- Data Visualization: Effective data visualization can help make AI models more transparent by showing the decision-making process in an accessible format. Interactive tools can allow users to query AI systems for further explanations.
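One possible reading of the hybrid idea above is a global surrogate: a simple, readable model trained to imitate a black box, with its agreement rate reported so users know how far to trust the explanation. The sketch below is only an illustration of that reading. The `black_box` classifier, the feature names `income` and `debt`, and the synthetic data are all hypothetical, and the surrogate is the smallest possible decision tree (a single if-then rule).

```python
import random


def black_box(row):
    """Hypothetical opaque classifier; treat its internals as unknown."""
    income, debt = row
    return 1 if 0.8 * income - 0.5 * debt + 0.1 * income * debt > 0.3 else 0


def fit_stump(rows, labels):
    """Find the single 'feature <= threshold' rule that best reproduces labels."""
    best = None
    for feature in range(len(rows[0])):
        for threshold in sorted({r[feature] for r in rows}):
            for left, right in ((0, 1), (1, 0)):
                preds = [left if r[feature] <= threshold else right for r in rows]
                agreement = sum(p == y for p, y in zip(preds, labels)) / len(rows)
                if best is None or agreement > best[0]:
                    best = (agreement, feature, threshold, left, right)
    return best


rng = random.Random(1)
rows = [(rng.random(), rng.random()) for _ in range(300)]
labels = [black_box(r) for r in rows]  # the surrogate learns from model outputs

fidelity, feature, threshold, left, right = fit_stump(rows, labels)
names = ["income", "debt"]  # hypothetical feature names
rule = f"if {names[feature]} <= {threshold:.2f} then {left} else {right}"
print(rule, f"(agrees with the black box on {fidelity:.0%} of inputs)")
```

The agreement rate (often called fidelity) matters as much as the rule itself: a readable rule that frequently disagrees with the black box explains little about it. A deeper tree would usually raise fidelity at the cost of readability, which mirrors the accuracy and explainability trade-off discussed earlier.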

Conclusion: Striving for Transparency in AI Decision-Making

The black box problem in AI presents a significant obstacle to the broader adoption and ethical implementation of AI technologies. However powerful they are, AI tools must become more transparent so that their decisions are understandable, trustworthy, and fair. As AI development progresses, the field of explainable AI continues to evolve. Researchers are exploring new ways to overcome these challenges, making it possible to enjoy the benefits of AI technologies without compromising on accountability or ethics.
