The rapid advancement of technology and professional business process automation services has brought undeniable benefits such as increased efficiency, reduced costs, and improved productivity. However, as automation becomes more widespread, the ethical implications of these technologies must be thoroughly considered.

What are the ethical dilemmas that arise from automation? How can we ensure fairness and responsibility in an increasingly automated world? This blog will delve into the ethics of automation, the challenges it presents and the importance of addressing these issues thoughtfully.

What is Process Automation and How Do Ethics Come into the Picture?

Process automation refers to the use of technology to perform repetitive tasks, traditionally carried out by humans, without the need for constant human intervention. From robotic process automation (RPA) in business operations to artificial intelligence (AI) and machine learning algorithms in decision-making, automation has become a game-changer.

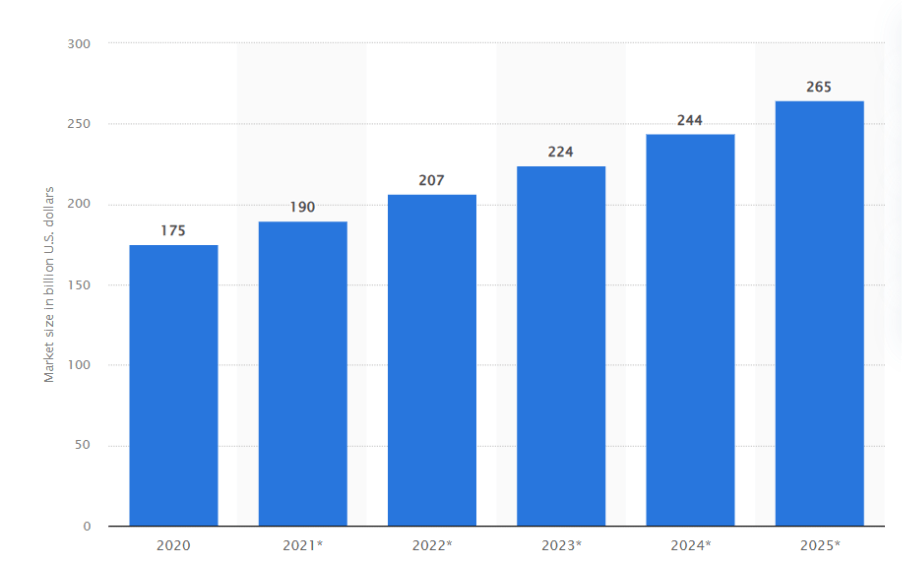

According to Statista, the global industrial automation market was valued at approximately $175 billion in 2020 and is projected to grow at a compound annual growth rate (CAGR) of about 9%, reaching roughly $265 billion by 2025. This growth underscores the increasing adoption of automation technologies across industries worldwide.

Source: Statista on Global Industrial Automation Market – 2020 to 2025
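
The quoted figures can be sanity-checked with the standard CAGR formula. The short sketch below is only an illustration (the function names are hypothetical, not from Statista); it suggests that "about 9%" is a rounding, since the rate implied by $175 billion growing to $265 billion over five years is a little under 9%.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the text, in billions of US dollars.
implied = implied_cagr(175, 265, 5)   # roughly 0.087
projected = project(175, 0.09, 5)     # roughly 269

print(f"Implied CAGR 2020-2025: {implied:.2%}")
print(f"175 compounded at 9% for 5 years: {projected:.1f}")
```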

While automation is seen as a tool to improve productivity, reduce human error, and streamline processes, it raises significant ethical questions. As machines take over more tasks, how can we ensure they are used responsibly? The ethics of automation involves considering the impact of technology on jobs, fairness, bias, accountability, and human dignity.

Bias and Fairness in Automated Systems

A primary concern in the ethics of automation is the potential for bias in automated systems. AI algorithms, if not properly tested or trained, can perpetuate and even amplify existing biases in society. These biases often stem from flawed or incomplete data, leading to unfair decision-making processes in critical sectors such as hiring, lending, and healthcare.

The Importance of Diverse Testing in AI Model Development

To mitigate biases, it is essential to employ diverse testing in the development of AI models. By exposing these models to a wide range of scenarios, languages, cultural contexts, and real-world examples, organizations can identify and eliminate biases before deployment. For instance:

- Testing across demographic groups: Ensure that AI systems do not favor one group over another.

- Incorporating different languages and cultural contexts: AI systems should be tested in various settings to identify potential cultural biases.
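
One simple way to test across demographic groups is to compare how often each group receives a favorable decision. The sketch below is a minimal illustration, assuming decisions are available as (group, outcome) pairs; the field names and sample data are hypothetical, and any pass/fail threshold applied to the ratio would be a policy choice rather than something this code decides.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of favorable decisions per group.

    `records` is an iterable of (group, decision) pairs, where decision
    is True for a favorable outcome. Both fields are hypothetical.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision
    return min(rates.values()) / highest


# Made-up sample: group A is approved 3 times out of 4, group B once out of 4.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = selection_rates(sample)
ratio = disparity_ratio(rates)
print(rates, round(ratio, 2))
```

A ratio well below 1.0, as in this sample, is a signal to investigate the model and its data rather than proof of unfairness on its own.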

Data Quality and De-biasing Processes in AI Systems

Data quality plays a crucial role in addressing bias in AI systems. Biases in training data—such as underrepresentation of certain demographic groups—can lead to biased outputs. Some strategies to tackle this issue include:

- Curating diverse datasets: Ensure all groups are fairly represented in training data.

- Implementing de-biasing techniques: Use algorithms to identify and correct biases in data before it is used in AI models.
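
As one example of a de-biasing technique, underrepresented groups can be given larger sample weights so that every group contributes equally during training. This is a sketch of simple inverse-frequency reweighting, chosen here for illustration and not a method named in this article; the group labels are hypothetical.

```python
from collections import Counter


def balancing_weights(groups):
    """Per-sample weights that give every group the same total weight.

    Each sample in group g gets n / (k * n_g), where n is the number of
    samples, k the number of groups, and n_g the size of group g.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]


# Made-up training set: group A has 6 samples, group B only 2.
groups = ["A"] * 6 + ["B"] * 2
weights = balancing_weights(groups)

total_a = sum(w for g, w in zip(groups, weights) if g == "A")
total_b = sum(w for g, w in zip(groups, weights) if g == "B")
print(total_a, total_b)
```

Many training libraries accept per-sample weights, so a list like this can usually be passed along with the data. Reweighting addresses representation only; it does not correct labels that are themselves biased.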

Feedback Systems Recognizing Diversity in AI Interactions

Incorporating feedback systems that recognize and adapt to diverse user interactions is another step toward ensuring fair AI outcomes. Some ways to integrate feedback mechanisms include:

- Gathering user feedback: Continuously collect feedback from users across different demographics.

- Adapting to user interactions: Use feedback to adjust AI models and address any biases that may arise.

Ethical Considerations in Automation

Ethical considerations in automation are multifaceted, involving both proscriptive and prescriptive ethics. Proscriptive ethics involves avoiding harm by preventing negative consequences, such as discrimination or invasion of privacy, while prescriptive ethics encourages positive actions, such as promoting well-being and fairness.

Proscriptive and Prescriptive Ethics in Automation

In the context of process automation, proscriptive ethics emphasizes avoiding harmful practices like biased decision-making or job displacement without proper safeguards. Prescriptive ethics, on the other hand, urges the use of automation to promote fairness, enhance job satisfaction, and improve accessibility. Ethical frameworks guide automation by helping companies align their practices with moral standards, ensuring that technology serves human interests and does not lead to unintended harm.

The Role of Ethics in Decision-Making Processes in Automation

Integrating ethical principles into automated decision-making processes is essential. Automated systems should be designed not only to optimize performance but also to adhere to ethical guidelines that reflect societal values. This can be achieved by embedding ethical algorithms and ensuring that decisions made by AI systems comply with rules of fairness, transparency, and accountability.
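
In practice, rules like these can be expressed as automated checks that every decision record must pass before it takes effect. The sketch below is a hypothetical illustration: the record fields (`inputs_used`, `reason`, `owner`) and the protected-field list are assumptions, with one check each for fairness, transparency, and accountability.

```python
# Hypothetical list of attributes a decision must not rely on directly.
PROTECTED_FIELDS = {"gender", "ethnicity", "age"}


def audit_decision(decision):
    """Return a list of rule violations for one automated decision record."""
    violations = []

    # Fairness: the decision should not use protected attributes directly.
    used = set(decision.get("inputs_used", []))
    leaked = sorted(used & PROTECTED_FIELDS)
    if leaked:
        violations.append("uses protected fields: " + ", ".join(leaked))

    # Transparency: every decision needs a human-readable reason.
    if not decision.get("reason"):
        violations.append("missing human-readable reason")

    # Accountability: someone must own the outcome.
    if not decision.get("owner"):
        violations.append("missing accountable owner")

    return violations


ok = audit_decision({
    "inputs_used": ["income", "tenure"],
    "reason": "income above threshold",
    "owner": "credit-team",
})
bad = audit_decision({"inputs_used": ["income", "age"], "reason": ""})
print(ok)
print(bad)
```

Excluding protected fields does not by itself guarantee fair outcomes, because other inputs can act as proxies, so a check like this complements outcome testing and does not replace it.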

Socially Responsible Automation and Employee-Centered Approaches

As automation increasingly impacts various sectors, businesses must adopt socially responsible practices. This involves considering the effects of automation on employees and the broader community. Key practices include:

- Providing reskilling opportunities: Ensure employees can transition into new roles.

- Adopting fair transition plans: Create strategies that support workers affected by automation.

- Offering support to displaced workers: Provide job placement services and severance packages.

Impact on Employment

One of the most debated aspects of process automation is its impact on employment. On one hand, automation can lead to job displacement, particularly in industries reliant on manual labor. On the other hand, automation can also create new opportunities by enabling workers to focus on more complex and creative tasks, thus driving innovation.

Job Displacement Statistics and Predictions

According to a 2023 report by McKinsey, automation could displace around 400 million jobs globally by 2030. However, the same report also predicts that automation could create 500 million new jobs, albeit in different sectors. This highlights the dual nature of automation’s impact on employment—while some jobs may be lost, new roles requiring different skill sets may emerge.

The Dual Nature of Automation’s Impact on Employment

Automation presents both challenges and opportunities for workers. While some jobs are at risk of being automated, others will require human intervention and oversight. The key to managing this transition is ensuring that workers are adequately supported through retraining and reskilling programs. Automation, when done responsibly, can result in better jobs and improved workplace conditions.

Involving Employees in Decisions About Job Displacement

Ethically, businesses should involve employees in decisions about automation, particularly when it comes to job displacement. Consulting with employees about automation initiatives ensures that their concerns are addressed and that they are treated with dignity throughout the process. Providing support such as severance packages, reskilling opportunities, and job placement services can help mitigate the negative effects of automation on workers.

Technological Advancements and Ethical Challenges

As AI and automation technologies continue to advance, new ethical challenges arise. For instance, automation in sectors like healthcare, finance, and law poses unique challenges that require sector-specific ethical guidelines. Ethical frameworks help companies navigate these challenges, ensuring responsible and accountable decision-making.

Ethical Frameworks for AI and Automation

Ethical frameworks are essential for guiding the responsible use of AI and automation. Proscriptive and prescriptive ethics are key components in ensuring that automated systems do not harm individuals or society while promoting positive outcomes. For instance, AI models need to be designed with ethical decision-making procedures that prevent harm and prioritize fairness. These frameworks are increasingly critical as technologies like autonomous trading agents or accident algorithms operate in high-stakes environments, where decisions must align with societal values and legal standards.

Responsibility and Negligence in AI Systems

One of the most pressing questions in the ethics of automation is who is responsible when things go wrong. In scenarios involving autonomous vehicles or AI-based healthcare systems, accountability is often murky. The social dilemma of autonomous vehicles—whether they should prioritize the lives of passengers over pedestrians, for example—requires careful ethical consideration. Should negligence in programming or design lead to harm, the company behind the AI must be held accountable. However, the robotic theory of mind—AI’s ability to understand and respond to human intentions—could complicate these discussions, making it more difficult to determine who is at fault.

Ethical Challenges in Different Sectors Using AI

Sectors like healthcare, finance, and law present unique ethical challenges. For example, in medical device regulation, the ethical implications of using AI-driven medical technologies to make diagnostic decisions are vast. It’s crucial that developers of such systems adopt a cross-cultural ethical framework to ensure fairness and accuracy in treatment across diverse patient populations. Bias vectors in the training data can lead to incorrect diagnoses or treatments, disproportionately affecting minority groups.

In finance, the rise of autonomous trading agents can introduce risks of market manipulation and instability. Addressing these concerns calls for a bottom-up procedure that encourages transparency about AI's role in these systems and prevents exploitation by powerful entities. At the same time, the financial industry's push for rapid AI adoption can lead to ethics washing, where companies claim ethical AI use without meaningful action.

Responsible Boundaries in AI Development

Establishing responsible boundaries in AI development is essential to avoid ethical pitfalls. This involves ensuring that AI systems do not operate beyond their intended scope or function, which is critical for maintaining public trust. For instance, AI systems used in public services should be subject to a top-down process that ensures they operate within ethical, legal, and societal boundaries.

As these technologies evolve, businesses and regulators must continually refine their approach to automation and AI ethics. Only through responsible and thoughtful action can we ensure that technological advancements benefit society without causing harm.

Transparency and Accountability

One of the most important aspects of process automation is ensuring transparency and accountability in automated systems. Without transparency, users cannot trust the system, and without accountability, mistakes or biases can go unchecked.

Importance of Explainable AI (XAI) for Transparency

Explainable AI (XAI) is a concept that allows users to understand how AI systems make decisions. By providing clear, understandable explanations for automated decisions, XAI fosters trust and accountability. This transparency is crucial for ensuring that automated systems remain ethical and align with societal values.
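
For simple models, an explanation can be as direct as listing how much each input contributed to the result. The sketch below assumes a hypothetical linear scoring model with made-up weights and feature names; more complex models generally need dedicated explanation techniques, which this example does not cover.

```python
def explain_linear_score(weights, bias, features):
    """Score a record and rank features by the size of their contribution."""
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    # Largest absolute contribution first, whether positive or negative.
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return score, ranked


# Hypothetical loan-scoring weights and one applicant's (scaled) features.
weights = {"income": 0.5, "debt": -1.0, "years_employed": 0.25}
applicant = {"income": 4.0, "debt": 1.5, "years_employed": 2.0}

score, ranked = explain_linear_score(weights, bias=0.5, features=applicant)
print(f"score = {score}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Presenting the ranked contributions alongside the decision gives the affected person and the reviewer something concrete to question or correct.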

Continuous Feedback Mechanisms

Continuous feedback mechanisms are essential for ensuring accountability in automated systems. By regularly collecting feedback from users and analyzing system performance, organizations can refine their models to ensure they continue to operate fairly and ethically. Feedback loops help ensure that systems remain adaptable and responsive to user needs.
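
A feedback loop can start as a rolling measure of how often users dispute automated outcomes. The sketch below is a minimal illustration with hypothetical names and thresholds: it keeps the most recent feedback events and flags the system for review when the complaint rate in that window exceeds a chosen limit.

```python
from collections import deque


class FeedbackMonitor:
    """Tracks recent user feedback and flags when complaints run high."""

    def __init__(self, window=100, alert_rate=0.2):
        # Only the most recent `window` events are kept.
        self.events = deque(maxlen=window)
        self.alert_rate = alert_rate

    def record(self, is_complaint):
        self.events.append(bool(is_complaint))

    def complaint_rate(self):
        if not self.events:
            return 0.0
        return sum(self.events) / len(self.events)

    def needs_review(self):
        return self.complaint_rate() > self.alert_rate


monitor = FeedbackMonitor(window=5, alert_rate=0.2)
for is_complaint in [False, False, False, False, True]:
    monitor.record(is_complaint)
flag_before = monitor.needs_review()  # 1 of 5 complaints, not above the limit

monitor.record(True)                  # oldest event drops out of the window
flag_after = monitor.needs_review()   # 2 of the last 5 are complaints
print(flag_before, flag_after)
```

The window size and alert rate are assumptions to tune per system; tracking one monitor per user group would also surface problems that affect only some users.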

Integration of Human Expertise in Automation

While automation can enhance efficiency, human expertise remains vital in ensuring that AI systems operate ethically. Human oversight can fill in technological gaps, ensuring that systems remain aligned with human values and ethical standards. By integrating human expertise, companies can ensure accountability in automated systems.
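
A common way to integrate human oversight is to let the system decide only when it is confident and send everything else to a person. The sketch below is illustrative: the function name, the returned fields, and the 0.9 threshold are assumptions, not a prescribed standard.

```python
def route_decision(prediction, confidence, threshold=0.9):
    """Accept confident predictions; send the rest to human review."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold:
        return {"decision": prediction, "decided_by": "model"}
    # Below the threshold the model only suggests; a person decides.
    return {
        "decision": None,
        "decided_by": "human_review",
        "suggested": prediction,
    }


auto = route_decision("approve", confidence=0.97)
manual = route_decision("reject", confidence=0.62)
print(auto)
print(manual)
```

Recording who made each decision, as the `decided_by` field does here, also keeps the accountability trail clear when outcomes are later questioned.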

Want process automation while ensuring ethics?

As automation continues to shape the future of work, balancing its benefits with ethical considerations is crucial. Businesses must adopt strategies that prioritize fairness, transparency, and responsibility while minimizing potential harm.

Companies like WeblineIndia, with their RelyShore Outsourcing Model, demonstrate that it is possible to automate processes ethically and effectively. By keeping ethical principles at the core of automation, you can build a future where technology serves humanity, promotes equality, and fosters innovation.
