In an economy driven by digitalization, the transformative power of Large Language Models (LLMs) is hard to overstate. From automating customer service to generating complex code, LLMs promise unprecedented efficiency and innovation. Yet for many large enterprises, especially those in highly regulated sectors such as finance, healthcare, and government, the pursuit of Artificial Intelligence (AI) innovation runs into a significant, non-negotiable roadblock: data privacy and security.

The default architecture for deploying sophisticated LLMs often involves outsourcing to major public cloud providers. While convenient, this model means that sensitive corporate data used to train, fine-tune, and interact with these models must leave the secure confines of the company’s internal network. This exposure is more than a risk: depending on the data and the jurisdiction, it can conflict with regulatory mandates (such as GDPR, HIPAA, and various national data localization laws) and with internal compliance policies.

This is where on-premise LLMs emerge, not merely as an alternative but as the essential architecture for enterprises determined to be privacy-first, many of which choose to hire a dedicated team for secure AI implementation. Deploying LLMs directly on corporate-owned, in-house hardware is the definitive way to achieve absolute data sovereignty, ensuring that proprietary information never leaves the physical and digital control of the organization.


The Great Conflict: Innovation vs. Compliance

The business case for AI is clear: competitive advantage, cost reduction, and superior decision-making. However, the corporate landscape is increasingly defined by strict data governance.

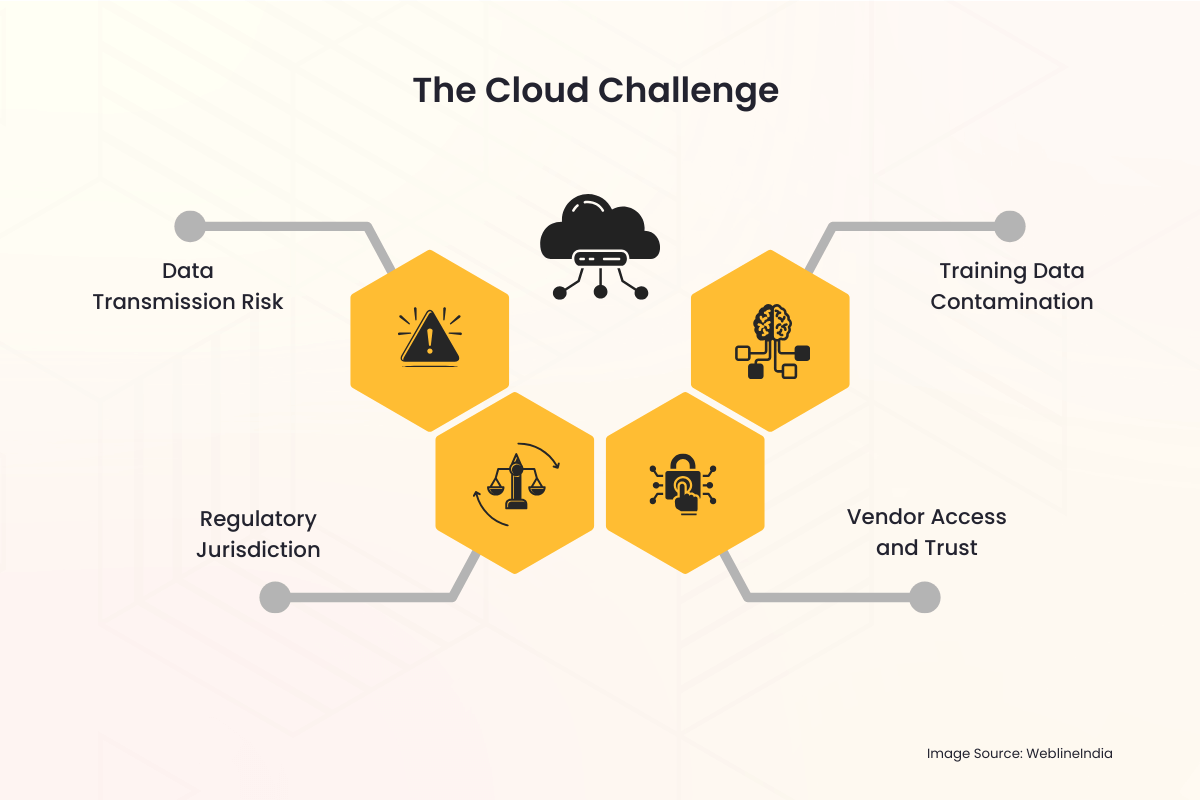

The Cloud Challenge

When an enterprise utilizes a cloud-hosted LLM service, several privacy pitfalls immediately surface:

- Data Transmission Risk: Every query, every input, and the resulting output must leave the corporate network, typically over the public internet, and be processed, at least temporarily, on third-party servers. Even when encrypted in transit, this path is a potential vector for interception or unauthorized access.

- Regulatory Jurisdiction: Data stored in a different country or jurisdiction than the enterprise’s own can trigger complex, often conflicting, international laws. Data localization (the requirement that data be stored and processed within specific borders) can be difficult to guarantee and verify when a multinational cloud provider controls where data is stored, replicated, and processed.

- Vendor Access and Trust: Enterprises are forced to trust the cloud vendor’s security protocols, employee access controls, and retention policies. This introduces a third party into the most sensitive data workflows.

- Training Data Contamination: If a company’s proprietary data is used to fine-tune a model hosted in the cloud, there is a risk that the resulting model weights could be inadvertently, or even maliciously, shared, or could influence the responses of models used by other tenants, leading to data leakage.

These concerns are not theoretical. High-profile incidents of data breaches and the severe penalties enforced by global regulatory bodies have elevated data privacy from an IT headache to a core boardroom priority. For organizations handling Personally Identifiable Information (PII), protected health information (PHI), or classified intellectual property (IP), the risk profile of public-cloud LLMs is often simply too high to accept.

The On-Premise Solution: Reclaiming Control and Sovereignty

The on-premise LLM deployment model directly addresses and resolves these profound privacy concerns by placing the entire AI infrastructure (the hardware, the software, the model weights, and the training data) under the direct and exclusive physical and digital control of the enterprise.

1. Absolute Data Sovereignty

Data Sovereignty means that data is subject only to the laws and governance rules of the country or organization where it is collected and stored.

- Geographic Control: With an on-premise setup, the hardware is physically located within the enterprise’s data center, often within a specific country or even a specific secure room. This directly satisfies data residency and data localization requirements, making compliance with regulations like the EU’s GDPR or various national security laws far simpler.

- Zero External Transmission: All data processing, model training, and inference (querying the model) happen within the corporate firewall. Sensitive prompts and proprietary internal documents used by the LLM never leave the secure network environment.
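Firewall egress rules are the primary control here, but applications can add a second line of defense by refusing to send prompts to any endpoint that does not resolve to an internal address. The sketch below is a minimal illustration under stated assumptions: the function name `is_internal_endpoint` is hypothetical, and it assumes internal services live on private (RFC 1918) or loopback ranges as reported by Python’s standard `ipaddress` module.

```python
import ipaddress
import socket
from urllib.parse import urlparse


def is_internal_endpoint(url: str) -> bool:
    """Hypothetical egress guard: True only if every address the host resolves to is private or loopback."""
    host = urlparse(url).hostname
    if host is None:
        return False  # not a usable URL, so refuse to send anything
    try:
        # Resolution uses whatever DNS this machine is configured with (ideally internal DNS).
        infos = socket.getaddrinfo(host, None)
    except socket.gaierror:
        return False  # unresolvable hosts are treated as external
    # sockaddr[0] is the address string; strip any IPv6 scope suffix such as "%eth0".
    addresses = {ipaddress.ip_address(info[4][0].split("%")[0]) for info in infos}
    return bool(addresses) and all(a.is_private or a.is_loopback for a in addresses)
```

A client library could call this before every request and raise an error when it returns `False`, so a misconfigured endpoint fails closed instead of silently sending sensitive prompts off-network.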

2. Enhanced Security and Compliance

An on-premise deployment allows the security team to apply the organization’s existing, robust security framework directly to the AI system.

- Existing Security Infrastructure: The LLM solution can be seamlessly integrated with established corporate security tools, including Intrusion Detection Systems (IDS), sophisticated firewalls, identity and access management (IAM) solutions, and data loss prevention (DLP) systems. This is often more comprehensive and tailored than the security layers offered by a generic cloud service.

- Customized Access Control: Access to the model itself can be strictly controlled via internal corporate Active Directory or other enterprise IAM solutions. Only authorized employees, departments, or applications can interact with the model, and access logs are retained internally for audit purposes.

- Simplified Auditing: Regulatory compliance often necessitates detailed audit trails. Owning the entire stack lets the enterprise keep detailed logs of every model use, training task, and admin action, making compliance checks far easier for internal and external auditors.
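Customized access control and internal auditing can work together: every authorization decision is checked against directory groups and written to an internal audit log. The sketch below assumes a hypothetical mapping from directory groups (for example, groups synced from Active Directory) to model permissions; the group names, action names, and `authorize` function are illustrative, not part of any specific IAM product.

```python
import json
import logging
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical mapping from directory groups to the model actions they permit.
GROUP_PERMISSIONS = {
    "llm-users": {"inference"},
    "llm-admins": {"inference", "fine_tune", "admin"},
}

# Audit records go to this logger; attach a handler that ships to internal log storage or a SIEM.
audit_logger = logging.getLogger("llm.audit")
audit_logger.setLevel(logging.INFO)


@dataclass
class User:
    name: str
    groups: set = field(default_factory=set)


def authorize(user: User, action: str) -> bool:
    """Allow the action only if one of the user's groups grants it, and audit every decision."""
    allowed = any(action in GROUP_PERMISSIONS.get(group, set()) for group in user.groups)
    audit_logger.info(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user.name,
        "action": action,
        "allowed": allowed,
    }))
    return allowed
```

Logging denied requests as well as allowed ones matters for auditors: the trail then shows not only who used the model but who attempted to.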

3. Intellectual Property Protection

For enterprises, the true value of an LLM lies not just in the foundational model (like Llama, Mistral, or others) but in the proprietary knowledge used to fine-tune it. This fine-tuning data is the organization’s IP.

- Isolated Training Environment: When fine-tuning is conducted on-premise, the proprietary datasets (such as customer records, internal reports, patent filings, or product specifications) are never uploaded to a third party. The resulting fine-tuned model weights, which effectively encode the company’s knowledge, are also retained internally, preventing any external party from gaining unauthorized access to the core IP.


The Practicalities of On-Premise LLM Architecture

While the privacy benefits are compelling, deploying an on-premise LLM requires careful planning and investment in the underlying technology.

The Hardware Challenge: The Need for GPUs

LLMs are computationally demanding. The key enabler for efficient on-premise deployment is specialized hardware, primarily GPUs (Graphics Processing Units).

- Inference Power: To serve real-time user queries (inference), the LLM requires high-speed memory and parallel processing power provided by GPUs, often in dedicated server racks.

- Training and Fine-Tuning: Training a model from scratch is a massive undertaking, but fine-tuning (adapting an existing model) is achievable on a smaller number of powerful GPUs. The hardware investment is a capital expenditure (CapEx) that replaces much of the ongoing operational expenditure (OpEx) of cloud service fees, offering long-term cost predictability, although power, cooling, and maintenance remain ongoing costs.
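Sizing GPUs usually starts with back-of-the-envelope arithmetic: model weights take roughly parameters × bytes per parameter, and the key/value (KV) cache used during generation adds 2 × layers × KV heads × head dimension × bytes per value for every token in flight. The sketch below covers only those two terms (it ignores activations and framework overhead) and adds a simple CapEx-versus-OpEx break-even calculation; all function names and input figures are hypothetical.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


def kv_cache_gb(layers: int, kv_heads: int, head_dim: int,
                seq_len: int, batch: int, bytes_per_value: float = 2) -> float:
    """Approximate KV cache size in GB: keys and values (factor 2) per layer, head, and token."""
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_value / 1e9


def breakeven_months(hardware_capex: float, monthly_onprem_opex: float,
                     monthly_cloud_cost: float) -> float:
    """Months until the up-front hardware cost is recovered by lower monthly spend."""
    monthly_savings = monthly_cloud_cost - monthly_onprem_opex
    if monthly_savings <= 0:
        return float("inf")  # on-premise never pays back under these assumptions
    return hardware_capex / monthly_savings
```

For example, a 7-billion-parameter model in 16-bit precision needs about 7e9 × 2 bytes = 14 GB for its weights alone, and an assumed Llama-2-7B-style configuration (32 layers, 32 KV heads, head dimension 128) adds roughly 2.1 GB of KV cache per 4,096-token sequence at 16-bit precision. With hypothetical figures of 240,000 in hardware, 5,000 per month in on-premise running costs, and 25,000 per month in cloud fees, `breakeven_months` returns 12.0.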

The Software Stack

The full on-premise solution involves more than just hardware; it requires a robust software and orchestration layer:

- Model Selection: The first step is choosing an open-weight foundational LLM (such as Llama 3 or Mistral) whose license permits commercial use and allows it to be downloaded and deployed on private infrastructure.

- Containerization (e.g., Docker/Kubernetes): To manage the complex deployment, the LLM and its dependencies are often packaged into containers. Orchestration tools like Kubernetes manage the cluster of GPU servers, ensuring scalability and high availability much like a private cloud environment. Enterprises often hire cloud and DevOps engineers to manage such complex AI infrastructure.

- Internal API Layer: An application programming interface (API) is built within the firewall, allowing internal applications (chatbots, coding assistants, data analysts) to send prompts to the LLM without ever touching the public internet.
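As an illustration of such an internal API layer, the sketch below uses only Python’s standard library `http.server` to expose one endpoint. Everything specific here is an assumption: the `/v1/generate` path, the JSON shape `{"prompt": ...}`, the placeholder `generate()` function standing in for the real inference runtime, and the internal bind address `10.0.0.5`. A production service would add TLS, authentication, and the access controls described above.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def generate(prompt: str) -> str:
    """Hypothetical placeholder for the locally hosted model; swap in the real inference call."""
    return f"[model output for: {prompt}]"


class PromptHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/v1/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            body = json.loads(self.rfile.read(length) or b"{}")
            prompt = body["prompt"]
        except (json.JSONDecodeError, KeyError, TypeError):
            self.send_error(400, "expected a JSON body with a 'prompt' field")
            return
        payload = json.dumps({"completion": generate(prompt)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def make_server(host: str = "10.0.0.5", port: int = 8080) -> ThreadingHTTPServer:
    """Bind to a specific internal interface (assumed address) rather than 0.0.0.0."""
    return ThreadingHTTPServer((host, port), PromptHandler)
```

On an internal host, `make_server().serve_forever()` starts the service. Binding to one internal interface instead of all interfaces keeps the endpoint from being exposed on any externally reachable network card.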

Overcoming Data Latency and Governance Challenges

On-premise solutions reduce data latency and strengthen governance for internal data sources.

- Real-time Internal Data Access: LLMs often need the most current internal data, which is commonly supplied through Retrieval-Augmented Generation (RAG): relevant documents are retrieved and added to the prompt at query time. Running on-premise gives the LLM low-latency, high-bandwidth access to internal databases, data lakes, and document repositories, leading to more accurate, contextual, and timely results without transferring data over the internet.

- Unified Governance: The data governance team can apply a single set of rules to the data, the RAG index, and the LLM itself, creating a unified and simpler compliance environment.
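The two points above can be combined in a small sketch: one redaction policy is applied both when documents enter the RAG index and when prompts arrive, and retrieval runs entirely in-process. This is a toy illustration, not a production design. It swaps in simple bag-of-words cosine similarity where a real deployment would likely use embeddings in an on-premise vector store, and the two regex rules and all names (`REDACTION_RULES`, `InternalIndex`, `build_prompt`) are hypothetical and far from a complete PII detector.

```python
import math
import re
from collections import Counter

# One governance policy, applied at indexing time and at query time (illustrative rules only).
REDACTION_RULES = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
]


def redact(text: str) -> str:
    for pattern, label in REDACTION_RULES:
        text = pattern.sub(label, text)
    return text


def tokens(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InternalIndex:
    """Toy in-memory index: documents are redacted before they are stored or vectorized."""

    def __init__(self, docs: dict):
        self.docs = {doc_id: redact(text) for doc_id, text in docs.items()}
        self.vectors = {doc_id: tokens(text) for doc_id, text in self.docs.items()}

    def retrieve(self, query: str, k: int = 2) -> list:
        q = tokens(redact(query))
        ranked = sorted(self.vectors, key=lambda d: cosine(q, self.vectors[d]), reverse=True)
        return ranked[:k]


def build_prompt(index: InternalIndex, question: str, k: int = 2) -> str:
    """Assemble the augmented prompt that is sent to the on-premise model."""
    context = "\n".join(index.docs[d] for d in index.retrieve(question, k))
    return f"Answer using only this internal context:\n{context}\n\nQuestion: {redact(question)}"
```

Because `redact` is the single source of truth for both paths, a governance change (for example, adding a rule for account numbers) takes effect for the index and for live prompts at the same time.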

Strategic Advantages for Privacy-Conscious Industries

The move to an on-premise architecture is a strategic decision that signals a strong commitment to regulatory adherence and customer trust.

| Industry | Primary Privacy Concern | On-Premise Solution Benefit |
| --- | --- | --- |
| Healthcare (HIPAA) | Protection of PHI and patient records. | Ensures PHI remains within a secure, dedicated environment; simplifies the audit logs required for compliance. |
| Financial Services (GDPR, PCI-DSS) | Customer PII, transaction data, algorithmic IP. | Guarantees data residency and localization; protects proprietary trading algorithms and customer scoring models from external exposure. |
| Government / Defense | Classified, highly sensitive, or state-secret information. | Allows deployment in air-gapped environments (networks physically isolated from the internet), minimizing exposure to external threats. |
| Manufacturing / R&D | Intellectual Property (IP), proprietary designs, and trade secrets. | Prevents leakage of unique manufacturing processes or product design details used during RAG or fine-tuning. |

Bridging the Gap with Professional IT Services

The decision to transition to an on-premise LLM architecture, while strategically sound for privacy, introduces significant operational complexity. It demands specialized skills in hardware procurement (specifically high-performance GPUs), setting up a robust, containerized environment (like Kubernetes), fine-tuning models on sensitive data, and integrating the LLM with existing enterprise systems. This is where a trusted IT services partner becomes invaluable.

WeblineIndia is a renowned IT agency in the US that specializes in providing the comprehensive expertise needed to navigate this transition seamlessly. They offer end-to-end professional services, from designing the optimal on-premise hardware stack tailored to your inference and training needs, to managing the secure deployment of open-source LLMs within your firewall.

Cloud experts at WeblineIndia’s development hub in India have deep experience in secure cloud infrastructure and AI implementation. By leveraging these experienced remote developers, enterprises can rapidly deploy their private LLM environment, ensure strict adherence to internal compliance policies, and accelerate time-to-value without hiring and training expensive, specialized in-house AI engineering teams. This partnership turns a challenging infrastructure project into a manageable, strategically executed initiative dedicated to maintaining your absolute data sovereignty.

The Future is Private and Secure with WeblineIndia

The excitement around LLMs should not overshadow the fundamental responsibility enterprises have to protect the sensitive data entrusted to them. For any large organization where compliance, privacy, and data sovereignty are non-negotiable (which is to say, most successful enterprises today), the public cloud LLM model is a fundamental mismatch.

The shift to on-premise LLM deployment represents a clear path forward. It is the necessary investment in hardware and expertise that closes the security gap, satisfies the most stringent global regulatory requirements, and ultimately allows the enterprise to harness the full, transformative potential of Artificial Intelligence without ever compromising its most valuable asset: its data.

Contact WeblineIndia IT consultancy services to escape the inherent risks of the cloud, reclaim control over your AI stack, and innovate boldly, with your customer trust, compliance standing, and intellectual property secured within your own sovereign domain.

